- Myths of Technology

How are LLMs destroying human language?

Exploring the impact of the growing use of LLMs, how it is harming our collective consciousness, and the need for educational reform to save humanity

Yesterday, I was listening to a song called “Kun Faya Kun” by A.R. Rahman when, suddenly, I couldn’t hold back my tears. While the man next to me on the flight gave me a concerned look, all I could think of was the beauty of the lyrics, in which the writer begs God to free him from his own self.

There is something very counterintuitive about attaining a higher self through submission to an external force.

But can an LLM ever understand these human experiences at all? What happens when LLMs regurgitate words without understanding their origin? And how does that affect people like you and me?

Let’s find out! But first, take a look at the scale of the problem we’re discussing:

Today, around 66% of people worldwide use AI, including LLMs, on a regular basis. ChatGPT alone has 501 million monthly users globally, holding roughly 74.2% of the LLM market share. We're witnessing the largest shift in human communication since the printing press, yet most of us barely notice it happening.

How did we start talking?

There are countless theories about how we got here, and researchers have been studying the evolution of language for centuries. But Dr. Dean Falk, an American neuroanthropologist, proposes that the answer might lie in the "putting-down-the-baby" hypothesis.

The hypothesis suggests that “vocal interactions between early hominid mothers and infants began a sequence of events that led, eventually, to human ancestors' earliest words.” Human babies could not cling to their mothers the way young chimps and monkeys do, because we lack fur. Mothers therefore had to put their babies down to forage or work, leaving the babies seeking reassurance that they weren’t being abandoned. Mothers responded by developing a communicative system that included facial expressions, body language, touching, patting, caressing, tickling, and emotionally expressive contact calls.

"Chronos and His Child" by Giovanni Francesco Romanelli depicts the Titan Cronus as Father Time, wielding his harvesting scythe while consuming his own child. The painting captures the fundamental paradox of time: the same force that creates also destroys. Humanity is reaching a similar stage, where we’re on the brink of destroying what we’ve spent centuries building. (Courtesy: Wikipedia)

The existence of a language depends on how easily it can be adopted by the next generation. This also means that languages die if they cannot be easily acquired by young children under the normal conditions of social life.

Therefore, humans have a biological instinct to shape language to cater to the next generation. Every language evolves to serve our needs, making it a fundamental tool for our survival as a species. This evolutionary pressure on language assumes that the speakers actually understand what they're saying. But what happens when an LLM regurgitates words it doesn’t understand?

The words that mean nothing

LLMs are trained on vast amounts of textual data to predict the next word in a sentence based on the context provided by the preceding words. This means that every AI-generated word is mostly just a “good enough” guess.
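To make the idea of next-word prediction concrete, here is a deliberately tiny sketch: a bigram counter that remembers, for each word, which words followed it in a toy corpus, and then always picks the most frequent follower. This is an illustrative assumption, not how ChatGPT or any production LLM actually works; real models use neural networks over subword tokens and far longer contexts, and they usually sample rather than always taking the top choice. The function names (`train_bigrams`, `predict_next`, `generate`) and the three-sentence corpus are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Toy bigram model (hypothetical illustration, not a real LLM):
    count which word follows each word across the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen.
    This is a 'good enough' guess based purely on counts, with no meaning involved."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # most_common(1) -> [(word, count)]; ties keep first-encountered order
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily chain predictions until no follower is known or max_words is reached."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


if __name__ == "__main__":
    toy_corpus = [
        "i understand your frustration",
        "i understand your concern",
        "i understand your frustration completely",
    ]
    model = train_bigrams(toy_corpus)
    print(generate(model, "i"))
```

With these three toy sentences, the model continues "your" with "frustration" rather than "concern" only because "frustration" appeared more often. Nothing in the counts encodes what frustration feels like.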

While humans use language to communicate with an intention behind every word, LLMs just spit out what they’ve been trained on. Even the most sophisticated AI-generated essay can, at best, carry only the intention of the short prompt that produced it. This means that anyone who reads it is interpreting something you never said.

The words an LLM generates carry no meaning. They carry no depth, no intention, and no inherent purpose.

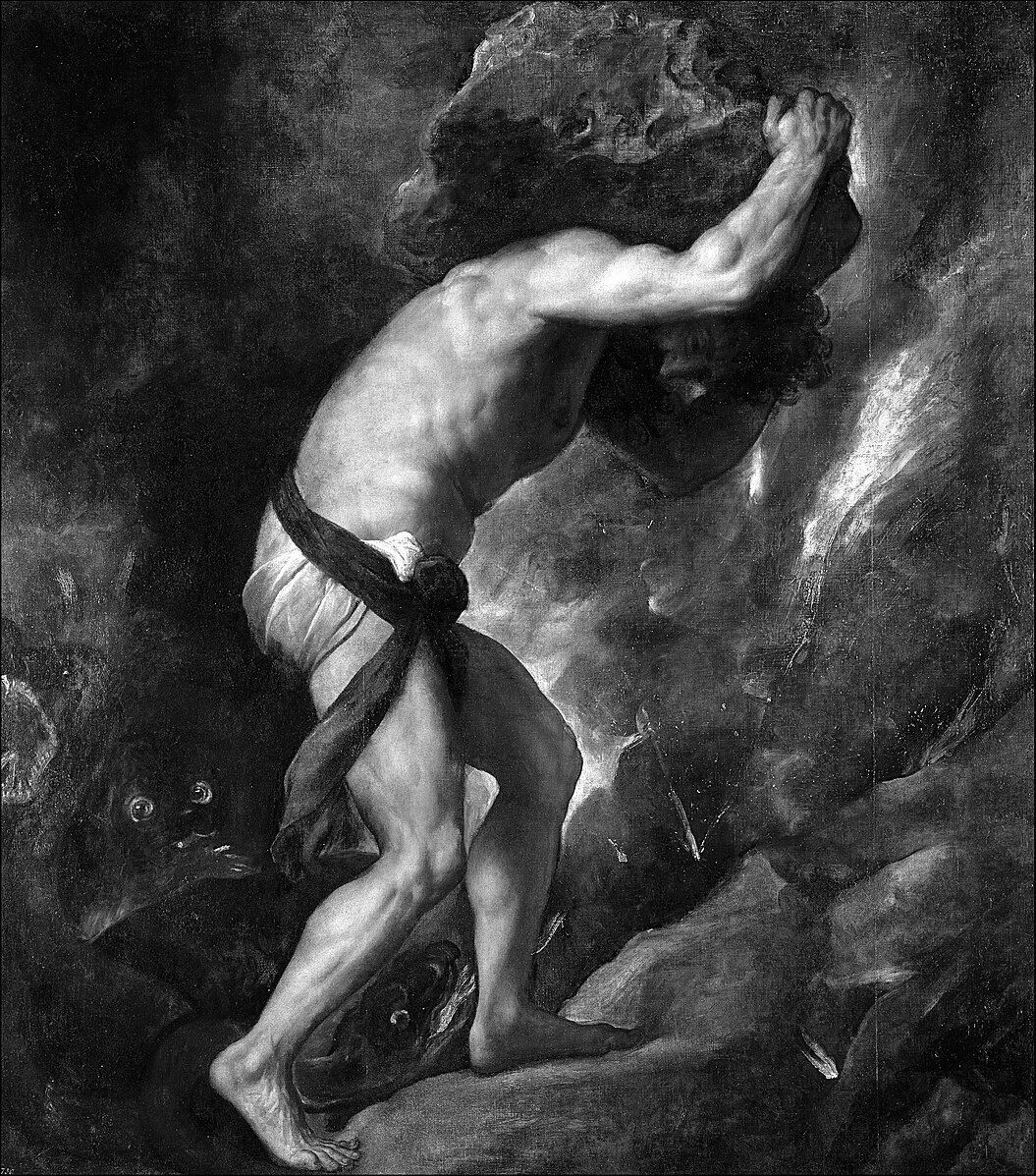

"The Myth of Sisyphus" depicts the king of Ephyra, condemned by Zeus to eternally push a boulder uphill, only to watch it roll back down each time he nears the summit. This eternal cycle of futile labor has become a perfect metaphor for using AI models to do work that seems meaningful yet ultimately leads nowhere. (Courtesy: Wikipedia)

When I write "heartbreak," your mind processes way more than just ten letters. It activates neural networks tied to memory, emotion, and embodied experience. You might remember the weight in your chest during a difficult goodbye, the way silence felt louder than noise, or how familiar places suddenly seemed foreign.

This is something that LLMs fundamentally cannot access.

When ChatGPT writes "I understand your frustration," it doesn't actually understand anything. It has only learned that the phrase typically appears in contexts marked by negative sentiment indicators. And that’s the beginning and the end of it.

The aftermath to avoid

We’re witnessing what we might call "the great flattening" of human communication. A friend recently, and very aptly, pointed out that everyone has started to sound like an LLM. As we increasingly rely on AI to write emails, summarize long DMs, or reply politely, we’re homogenizing how we express ourselves.

For example, LLMs tend to overuse hyperbolic words and stock metaphors such as “groundbreaking,” “vital,” and “esteemed,” or “weaving” a “rich” or “intricate tapestry” and “painting” a “vivid picture.” As a result, we’ve started using a standard template to praise things instead of describing each thing for what it is.

Language shapes how we think and who we become. But when our linguistic tools become mechanical, so does our thinking. Just as agriculture reduced crop diversity and drove some species to extinction, the democratization of LLMs threatens to reduce the variety of human thought.

We might end up with hyper-sanitized versions of our languages and, with them, a narrow set of emotions optimized to benefit whoever controls the technology.

What’s next?

Language exists as the space between our minds. But when that space is flooded with words, thoughts, emotions, and intentions that are not ours, we break the feedback loop that keeps languages alive.

As AI-generated content floods the internet, authentic human expression will only become more sacred. Preserving it will require better thinkers and place greater responsibility on the few who are willing to take it on. This calls for educational reform that protects our ability to produce original thought.

We must protect the spaces that are exclusively human. We must continue to learn how to think. We must do whatever it takes to develop critical thinking, emotional intelligence, and the capacity for nuanced expression.

"Satan Before the Throne of God" by William Blake depicts the confrontation between rebellion and divine authority, showing the sacredness of being understood. Satan, in his defiance, stands before the God who truly understands his motivations, his pain, and his fall from grace. This divine recognition transforms Satan's opposition into a meaningful encounter. While LLMs simulate “understanding” with increasing sophistication, they cannot truly comprehend our struggles, fears, and hopes. Perhaps what makes human connection irreplaceable is the gift of being authentically understood by another conscious being. (Courtesy: metmuseum)

Most importantly, we must remember why human language matters. Every word we speak or write carries the weight of our experience, the specificity of our perspective, and the possibility of a genuine connection with another consciousness.

When I heard "Kun Faya Kun" on that flight, the tears came not from the words alone but from their resonance with my own struggles with surrender, identity, and transcendence. That moment of being understood by another is what makes language a bridge between one soul and another, and no LLM, no matter how sophisticated, can replicate it.

The question is whether we have the wisdom not to destroy that bridge as we navigate the age of AI.

For the first time in history, our voices can reach millions instantly. Do we have the courage to speak our imperfect truths, or will we surrender our authenticity under the pressure to produce seemingly perfect words?
